2025-09-05: LLM Research Meeting

Participants: Entire research team members

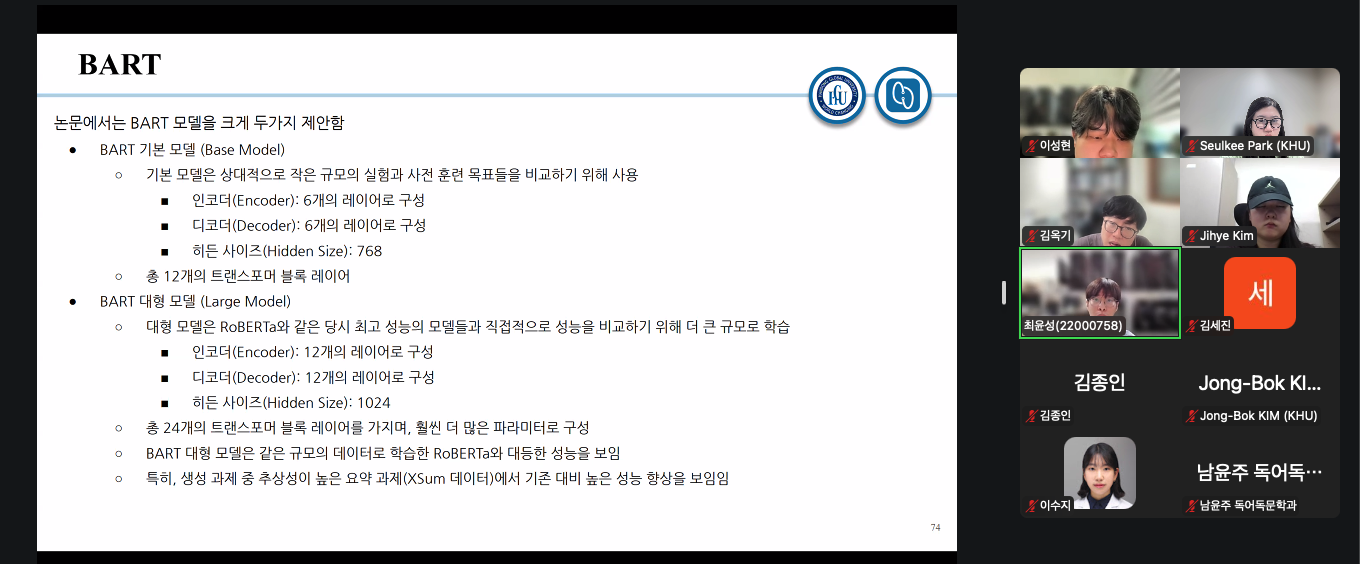

This session addressed fine-tuning techniques for downstream task models, exploring the application side of large language models. We discussed the mechanisms of pre-training and fine-tuning in BERT and GPT-style architectures, as well as strategies to enhance language-understanding performance.

Readings

- Korean Embeddings

- Hello, Transformer

- Attention Is All You Need

- BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding

- Improving Language Understanding by Generative Pre-Training